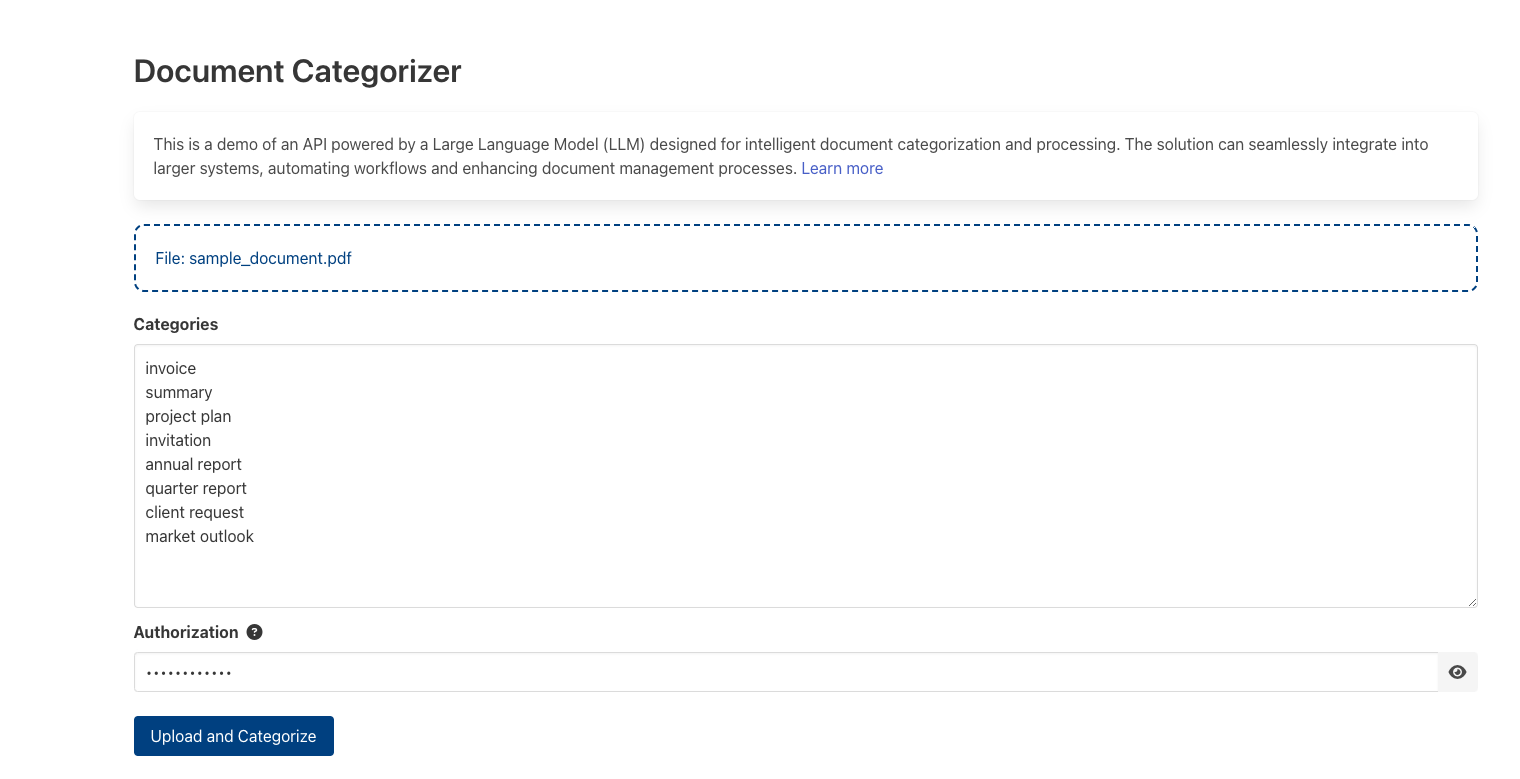

Application Overview

The main goal of the application is to streamline document processing using LLM capabilities. Users can upload one or more PDF documents, specify categories for classification, and authorize requests with a unique code provided by the application owner. The LLM processes the documents according to a command such as “categorize received document,” but this is highly flexible: the command can be changed to perform other tasks, such as:

- Extracting specific information.

- Validating required stamps and signatures.

- Extracting product prices.

- Triggering actions based on extracted data.
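Because the command is just text sent to the LLM, changing the task mostly means changing the prompt. The sketch below shows one way this could work. It assumes the PDF text has already been extracted and that the model is asked to answer with a single category name. `buildPrompt`, `parseCategory` and the prompt wording are hypothetical and are not taken from the application's source.

```typescript
// Hypothetical helpers (not from the project's source): turn a configurable
// command plus user-defined categories into a prompt, and map the model's
// free-text reply back onto one of the allowed categories.

interface PromptInput {
  command: string;      // e.g. "categorize received document"
  categories: string[]; // categories entered by the user
  documentText: string; // text already extracted from the PDF (not shown)
}

function buildPrompt({ command, categories, documentText }: PromptInput): string {
  const list = categories.map((c) => `- ${c}`).join("\n");
  return [
    `Task: ${command}.`,
    "Answer with exactly one category name from this list:",
    list,
    "",
    "Document:",
    documentText,
  ].join("\n");
}

// Case-insensitive match after removing stray punctuation and whitespace;
// anything outside the list falls back to "Uncategorized".
function parseCategory(reply: string, categories: string[]): string {
  const normalized = reply.toLowerCase().replace(/[."']/g, "").trim();
  const match = categories.find((c) => c.toLowerCase() === normalized);
  return match ?? "Uncategorized";
}

// Usage
const categories = ["Invoice", "Contract", "Receipt"];
const examplePrompt = buildPrompt({
  command: "categorize received document",
  categories,
  documentText: "INVOICE No. 2024/01 ...",
});
console.log(examplePrompt);
console.log(parseCategory(" invoice. ", categories)); // Invoice
```

If you switched to another task from the list above, such as extracting prices, `buildPrompt` would stay unchanged. Only the expected answer format and the parsing step would need to change.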

Results are displayed on the frontend but can also be stored in databases, filesystems, or spreadsheets. The application can also pick up documents automatically: triggers monitor specific folders and start processing when new documents are uploaded there.
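To store results in a spreadsheet, one simple option is a CSV file that Excel or Google Sheets can open. Here is a minimal sketch that assumes each result is just a file name plus the assigned category. The `ResultRow` shape and the `toCsv` name are hypothetical. Quoting follows the usual CSV convention (RFC 4180): a field containing commas, quotes or line breaks is wrapped in double quotes, and any embedded quotes are doubled.

```typescript
// Hypothetical shape of one processed document's result.
interface ResultRow {
  fileName: string;
  category: string;
}

// Quotes a field only when needed, doubling any embedded double quotes.
function escapeCsvField(value: string): string {
  return /[",\r\n]/.test(value) ? `"${value.replace(/"/g, '""')}"` : value;
}

// Serializes results to CSV with a header row and CRLF line endings.
function toCsv(rows: ResultRow[]): string {
  const header = ["fileName", "category"].join(",");
  const body = rows.map((r) => [r.fileName, r.category].map(escapeCsvField).join(","));
  return [header, ...body].join("\r\n");
}

// Usage
const csv = toCsv([
  { fileName: "invoice_01.pdf", category: "Invoice" },
  { fileName: 'contract "final", v2.pdf', category: "Contract" },
]);
console.log(csv);
```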

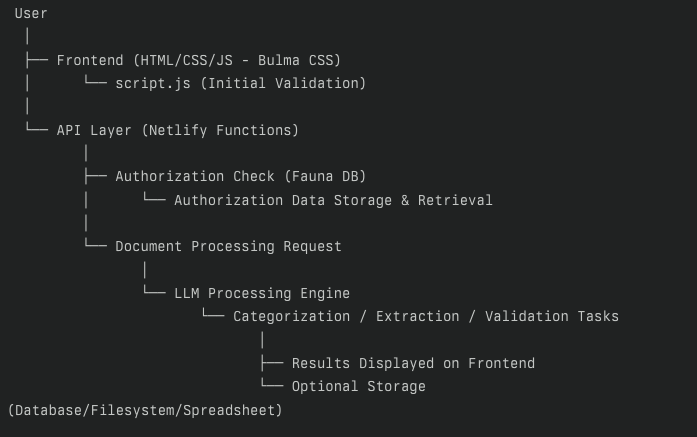

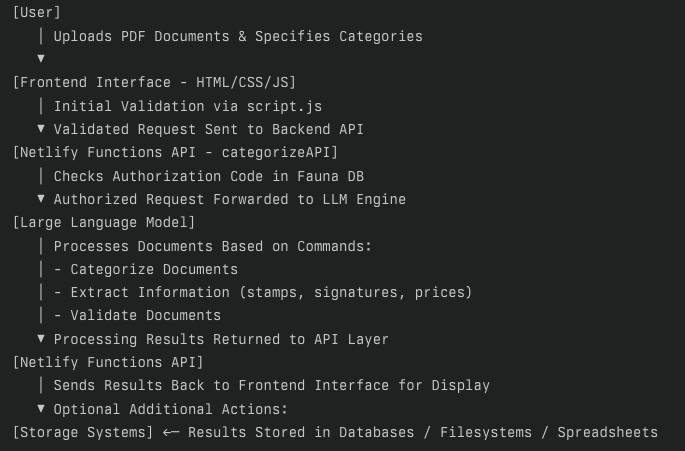

System Architecture

The application combines the following technologies:

| Component | Purpose |
|---|---|
| Netlify | Hosting HTML, CSS, and JavaScript files. |
| Bulma CSS | Styling the frontend interface. |
| Netlify Functions | Backend logic for API creation. |
| Fauna DB | Storing authorization and usage data. |
| Google Analytics | Insights into user behavior. |
| LLM | Document processing and transformation. |

A single custom API, built with Netlify Functions, handles the backend operations: validation, authorization checks against Fauna DB, and interaction with the LLM.
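You can sketch the order of operations in such a function without Netlify or Fauna by passing the database and LLM calls in as dependencies. This minimal sketch assumes a JSON body with an authorization `code`, the `categories`, and base64-encoded `files`, and it assumes each document consumes one credit. `handleCategorize`, `Deps` and all field names are hypothetical. A real Netlify Function would call this logic from its own handler.

```typescript
// Hypothetical request body sent by the frontend.
interface CategorizeRequest {
  code: string;                              // authorization code from the owner
  categories: string[];                      // user-defined categories
  files: { name: string; base64: string }[]; // PDFs encoded as base64
}

interface HandlerResult {
  status: number;
  body: unknown;
}

// External services are injected so the flow can run without Fauna or an LLM.
interface Deps {
  // Remaining credits for the code, or null if the code is unknown.
  getCredits(code: string): Promise<number | null>;
  // Records that `count` documents were processed with this code.
  recordUsage(code: string, count: number): Promise<void>;
  // Sends one document to the LLM and returns its answer.
  categorize(file: { name: string; base64: string }, categories: string[]): Promise<string>;
}

async function handleCategorize(req: CategorizeRequest, deps: Deps): Promise<HandlerResult> {
  // 1. Validation: repeat the frontend checks, since the client can't be trusted.
  if (!req.code || req.categories.length === 0 || req.files.length === 0) {
    return { status: 400, body: { error: "code, categories and files are required" } };
  }
  // 2. Authorization: the code must exist and cover every submitted document.
  const credits = await deps.getCredits(req.code);
  if (credits === null) return { status: 401, body: { error: "invalid code" } };
  if (credits < req.files.length) return { status: 403, body: { error: "not enough credits" } };
  // 3. LLM processing, one document at a time.
  const results: { name: string; category: string }[] = [];
  for (const file of req.files) {
    results.push({ name: file.name, category: await deps.categorize(file, req.categories) });
  }
  // 4. Usage bookkeeping, only after processing succeeded.
  await deps.recordUsage(req.code, req.files.length);
  return { status: 200, body: { results } };
}

// Usage with in-memory fakes standing in for Fauna DB and the LLM.
const creditStore = new Map<string, number>([["DEMO-123", 5]]);
const fakeDeps: Deps = {
  getCredits: async (code) => creditStore.get(code) ?? null,
  recordUsage: async (code, count) => {
    creditStore.set(code, (creditStore.get(code) ?? 0) - count);
  },
  categorize: async (file) => (file.name.includes("invoice") ? "Invoice" : "Other"),
};
```

Keeping Fauna and the LLM behind a small interface like `Deps` lets you test the flow locally without spending credits or making real API calls.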

Development Process

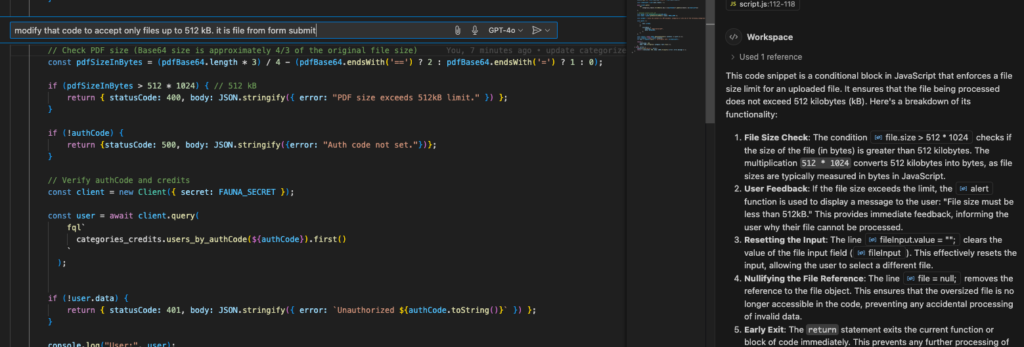

The development process was heavily aided by AI tools:

- GitHub Copilot in VS Code: Assisted in writing most of the code.

- Perplexity AI & Google Gemini: Provided answers to specific technical questions.

- Manual configurations (e.g., database setup and Netlify deployment) were completed with step-by-step guidance from LLMs.

This approach significantly reduced development time and allowed me to focus on refining functionality.

A good example of an LLM exceeding expectations came when I asked it to help secure a backend function. My code rejected large files only on the frontend, which left the backend exposed to oversized requests. The LLM not only spotted this gap but also proposed a robust, accurate fix with appropriate file size limits. This experience shows the practical value of LLMs in software development workflows.
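A server-side size check does not need to decode the upload. Base64 encodes every 3 bytes as 4 characters, so you can compute the decoded size from the string length. The sketch below assumes each file arrives as a raw base64 string with no `data:` URL prefix. The 4 MB per-file limit and the function names are assumptions for illustration, not the limits the LLM suggested for this project. The limit was chosen to stay under the roughly 6 MB request payload limit that Netlify documents for synchronous functions, since base64 inflates the payload by about a third.

```typescript
// Assumed per-file limit (hypothetical value for illustration).
const MAX_FILE_BYTES = 4 * 1024 * 1024;

// Bytes a base64 string decodes to, computed without decoding it:
// every 4 characters carry 3 bytes, minus one byte per "=" padding char.
function decodedBase64Size(b64: string): number {
  const clean = b64.replace(/\s/g, "");
  if (clean.length === 0) return 0;
  const padding = clean.endsWith("==") ? 2 : clean.endsWith("=") ? 1 : 0;
  return Math.floor((clean.length * 3) / 4) - padding;
}

// Rejects oversized uploads and anything that does not look like a PDF.
function checkUpload(b64: string): { ok: true } | { ok: false; reason: string } {
  const size = decodedBase64Size(b64);
  if (size > MAX_FILE_BYTES) return { ok: false, reason: `file too large (${size} bytes)` };
  // A file starting with the "%PDF-" header encodes to base64 starting with "JVBERi".
  if (!b64.startsWith("JVBERi")) return { ok: false, reason: "not a PDF" };
  return { ok: true };
}
```

Running a check like this before any Fauna DB or LLM call rejects bad requests cheaply, before they consume credits.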

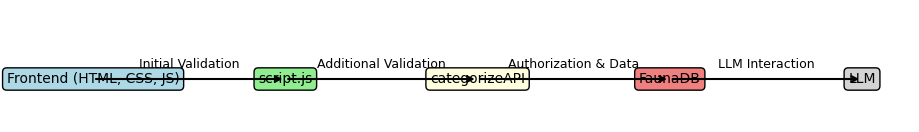

Technical Diagram

Below is the system architecture diagram illustrating the workflow:

System Architecture

Workflow Explanation:

- Frontend (HTML, CSS, JS): Handles user inputs and displays results.

- script.js: Performs initial validation of uploaded documents.

- categorizeAPI: Repeats the validation on the server, checks authorization and usage data in Fauna DB, and sends the documents to the LLM.

- Fauna DB: Stores authorization codes and usage data.

- LLM: Processes documents based on commands provided by the API.
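Splitting the work between script.js and categorizeAPI means the same checks run twice. They run once in the browser for fast feedback, and once on the server, where they cannot be bypassed. The sketch below covers the browser side. It relies only on the `name`, `type` and `size` properties that browser `File` objects expose. The file-count and size limits and the `validateSelection` name are hypothetical.

```typescript
// Minimal subset of the browser File interface; File objects from an
// <input type="file"> element satisfy it.
interface FileLike {
  name: string;
  type: string; // MIME type reported by the browser (may be empty)
  size: number; // bytes
}

// Hypothetical limits for illustration.
const MAX_FILES = 10;
const MAX_SIZE_BYTES = 4 * 1024 * 1024;

// Returns a list of human-readable errors; an empty list means "OK to submit".
function validateSelection(files: FileLike[], categories: string[]): string[] {
  const errors: string[] = [];
  if (files.length === 0) errors.push("Select at least one PDF.");
  if (files.length > MAX_FILES) errors.push(`Select at most ${MAX_FILES} files.`);
  for (const f of files) {
    // Trust the reported MIME type; fall back to the extension when it is empty.
    const isPdf =
      f.type === "application/pdf" || (f.type === "" && f.name.toLowerCase().endsWith(".pdf"));
    if (!isPdf) errors.push(`${f.name} is not a PDF.`);
    if (f.size > MAX_SIZE_BYTES) errors.push(`${f.name} is larger than ${MAX_SIZE_BYTES / (1024 * 1024)} MB.`);
  }
  if (categories.filter((c) => c.trim() !== "").length === 0) {
    errors.push("Enter at least one category.");
  }
  return errors;
}

// In the browser this could be called as:
// validateSelection(Array.from(input.files ?? []), categories)
```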

Challenges Encountered During Development

While developing the application with LLM support, several challenges emerged that required manual intervention or additional effort to resolve. Here are the key obstacles:

1. Incorrect Instructions for FaunaDB FQL Queries

LLMs often provided outdated or incorrect instructions for FaunaDB queries. The Netlify driver requires FQL v10, but most solutions proposed by LLMs were based on FQL v4, leading to compatibility issues. Additionally, understanding the concepts of collections and indices in FaunaDB proved challenging, requiring significant time and experimentation to grasp fully.

2. Refactoring for Desktop and Mobile Support

Although LLMs were helpful in generating the initial responsive layout, they struggled to refactor it so that it worked well on both desktop and mobile devices. Achieving the desired responsiveness required manual adjustments or several rounds of follow-up prompts.

3. Difficulty with FaunaDB Documentation

FaunaDB’s documentation is not particularly user-friendly, especially on how to update records after retrieving them. This lack of clarity caused delays, as both the LLM and I struggled to work out how to perform these updates.

4. Placement of JS and CSS Files

Organizing JavaScript and CSS files into the correct sections or directories did not work as expected in some cases. While LLMs provided guidance, certain parts of the code still required manual corrections to ensure proper functionality.

What Went Easier Than Expected

Despite the challenges, some aspects of the development process were surprisingly smooth:

1. Learning and Deploying Code to Netlify

Netlify proved to be an intuitive platform for hosting and deploying the application. The deployment process was straightforward, requiring minimal effort thanks to its user-friendly interface.

2. Local Testing of Netlify Applications

Testing the application locally with the Netlify CLI (e.g., `netlify dev`, which serves the site and its functions together) was efficient and allowed quick debugging before deployment.

3. Testing FQL Queries in FaunaDB Shell

The FaunaDB shell provided a convenient environment for testing FQL queries directly, making it easier to validate query logic without relying solely on external tools or code execution.

These experiences highlight both the strengths and limitations of using LLMs in development workflows, emphasizing the importance of combining AI assistance with manual expertise for optimal results.

Conclusion

This project shows how LLMs can make document processing more flexible, with workflows that adapt to different business needs. AI tools handled much of the development work, with manual effort needed mainly for the challenges described above, and helped produce an application that simplifies complex tasks while remaining extensible for future enhancements.

The application is available here: https://categorize.netlify.app/

Source code: https://github.com/plusz/categorize/

Please contact me to receive credits for document processing or to discuss integrations.